Volume 4 Issue 2

Insertion site assessment of peripherally inserted central catheters: Inter-observer agreement between nurses and inpatients

Joan Webster, Sarah Northfield, Emily N Larsen, Nicole Marsh, Claire M Rickard, Raymond J Chan

Abstract

Introduction: Many patients are discharged from hospital with a peripherally inserted central catheter in place. Monitoring the peripherally inserted central catheter insertion site for clinical and research purposes is important for identifying complications, but the extent to which patients can reliably report the condition of their catheter insertion site is uncertain. The aim of this study was to assess the inter-observer agreement between nurses and patients when assessing a peripherally inserted central catheter site.

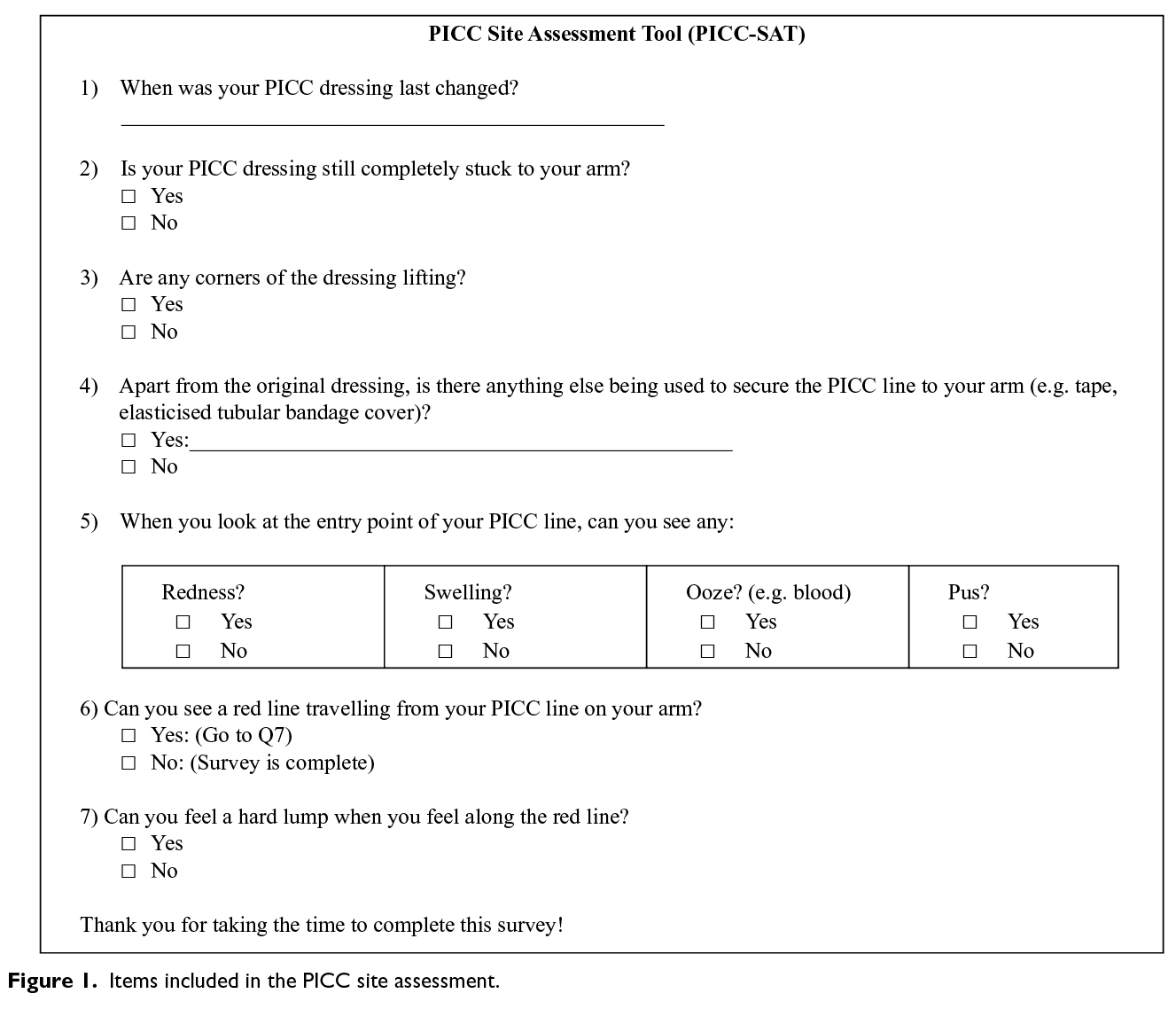

Methods: The study was based on inpatients who were enrolled in a single-centre, randomised controlled trial comparing four different dressing and securement devices for peripherally inserted central catheter sites. A seven-item peripherally inserted central catheter site assessment tool, containing questions about the condition of the dressing and the insertion site, was developed. Assessment was conducted once by the research nurse and, within a few minutes, independently by the patient. Proportions of agreement and Cohen’s kappa were calculated.

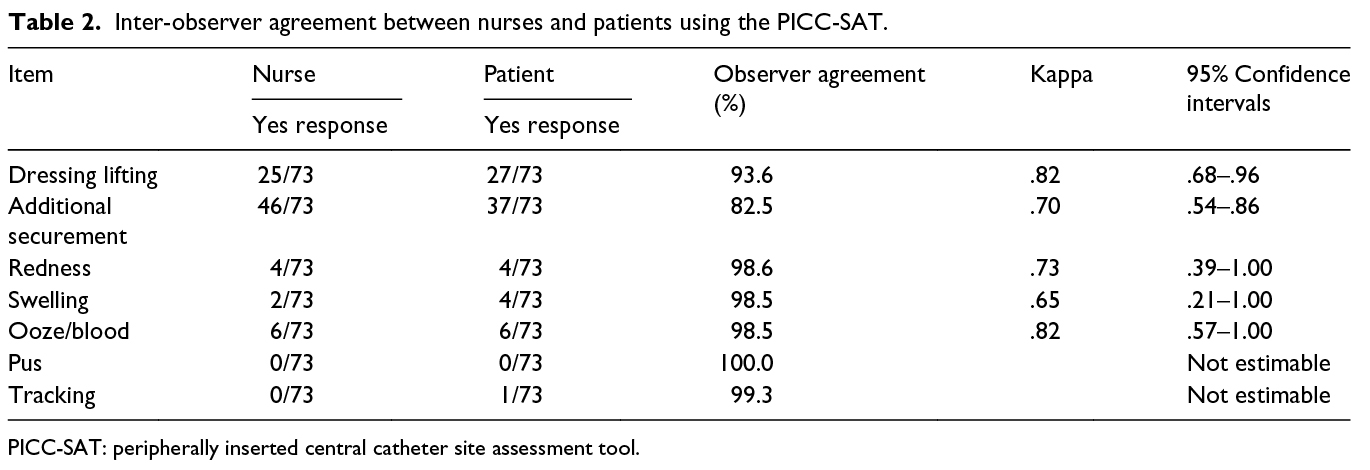

Results: In total, 73 patients agreed to participate. Overall, percentage agreement ranged from 83% to 100% (kappa = .65–.82). For important clinical signs (redness, swelling, ooze, pus and tracking), there were high levels of percentage agreement (99%–100%).

Conclusion: The high level of agreement between nurse–patient pairs makes the instrument useful for assessing peripherally inserted central catheter–associated signs of localised infection, allergic or irritant dermatitis or dressing dislodgement in a community setting.

Keywords: Observer variation, central venous catheters, inter-observer agreement, patient-reported outcomes.

Date received: 25 July 2017; accepted: 17 December 2017.

Republished with permission: The Journal of Vascular Access 2018;19(4):370–374. © The Author(s) 2018

Reprints and permissions: sagepub.co.uk/journalsPermissions.nav DOI: 10.1177/1129729818757965

https://doi.org/10.1177/1129729818757965 journals.sagepub.com/home/jva


BACKGROUND

Due to innovations in medical techniques, models of care and care processes, the average length of stay in acute hospitals has been steadily falling1. These efficiencies have led to many more patients receiving post-hospital care, through schemes such as ‘hospital-in-the-home’ or other forms of community care2. In addition, patients may also be referred directly to community services from emergency departments, avoiding hospital admission altogether3. While these new models of care may lead to shorter lengths of stay and reduced inpatient-related costs4, they pose significant problems for researchers and clinicians, who may need to monitor patients for longer periods of time, but without the high cost associated with in-home visits.

A common medical intervention that is increasingly being transferred to community care is the administration of long-term antibiotics, chemotherapies or other parenteral therapies5,6. These therapies are frequently administered through a peripherally inserted central catheter (PICC), where rates of failure, due to occlusion, thrombosis, accidental removal, suspected blood stream infection and so on, may be as high as 15%7. Patients receiving such therapies may also be enrolled in clinical trials, which require frequent monitoring of the insertion site. For cancer patients, who are immunocompromised due to their antineoplastic therapy, such monitoring is particularly important. As a consequence, patients usually return to the hospital for assessment, which imposes a financial, physical and social burden. One solution for long-term catheter-related follow-up would be patient-reported outcomes, but this is problematic if such measures have not been validated.

We know from inter-observer agreement studies that concordance between health care professionals is low for many conditions8,9. However, in related work, an acceptable level of inter-observer agreement was found when two nurses independently rated the condition of peripheral intravenous catheter (PIVC) sites10. The high level of agreement between two nurse raters may have been established because both were experienced intravenous (IV) access researchers or it may be that complications associated with an IV access site are less ambiguous than for other catheters such as PICCs.

At our hospital, approximately one-quarter of patients who have a PICC line inserted are outpatients. So, to reduce the burden of hospital visits, we were interested in understanding the reliability of patients’ own assessments of their PICC insertion site. If assessment was found to be consistent between the patient and an expert assessor, it would allow us to use such patient-reported outcome data with confidence for both clinical and research purposes. Consequently, the aim of this study was to assess the inter-observer agreement between a nurse, experienced in management of IV access devices, and a patient, enrolled in a clinical trial.

METHODS

Participants and setting

The study centre was a tertiary referral teaching hospital with over 900 beds, located in South East Queensland, Australia. Between March 2014 and March 2015, 124 patients admitted to cancer care, medical or surgical wards, and who required a PICC were recruited to a single-centre, pilot randomised controlled trial (RCT). The four-arm trial was designed to test the feasibility of processes for a larger RCT, comparing the effectiveness of PICC dressings and securement devices. The trial’s primary outcome was any reason that led to catheter failure (catheter-associated blood stream infection, local infection, total or partial dislodgement, occlusion, thrombosis and/or PICC fracture). As part of the trial, we conducted an inter-observer agreement study of enrolled patients. The study protocol for the RCT and for the inter-observer agreement study was approved by the ethics committees of the hospital (HREC/13QRBW/454) and university (NRS/10/14/HREC). We registered the trial with the Australian and New Zealand Clinical Trials Registry (ACTRN12616000027415).

Data collection

For the inter-observer agreement study, a seven-item PICC site assessment tool (PICC-SAT) was developed by the investigators, based on recommended criteria for dressing integrity11 and commonly recognised signs and symptoms for phlebitis and local infection12. The tool contained four items to assess the integrity of the dressing and three items to measure visible and palpable signs of infection and inflammation (Figure 1). All core items required a yes/no answer. We also included one optional item, which asked participants to name any additional product used to secure the dressing (e.g. tape and/or elasticised tubular bandage).

Face validity was initially tested with six nurses experienced with IV access. Subsequently, five patients were asked to assess the instrument for readability and comprehension. Following feedback from these groups, wording for some questions was slightly modified.

During the RCT, the PICC site was inspected daily by a research nurse, to check for protocol compliance and study outcomes. During one of these assessment visits, and at least two days after PICC insertion, the research nurse (an experienced registered nurse with training in PICC site assessment) asked the patient for consent to participate in the inter-observer agreement sub-study. If the patient agreed, the site assessment was conducted once by the research nurse and, within a few minutes, independently by the patient, who was blinded to the nurse’s assessment.

Analysis

First, we used observer agreement as suggested by De Vet et al.14 because it is the most appropriate way to establish an absolute measure of agreement between two people rating categorical variables such as ‘present or yes’ and ‘not present or no’. Using a two-by-two table, observer agreement is calculated by dividing the number of concordant observations by the total number of observations. Second, we calculated Cohen’s kappa, which is a relative measure, or a measure of reliability, and is arguably the most frequently used test for assessing observer variation. For the kappa statistic, we accepted common cut-off points: 0.0–0.2 (slight agreement), 0.21–0.4 (fair agreement), 0.41–0.6 (moderate agreement), 0.61–0.8 (substantial agreement) and more than 0.8 (almost perfect or perfect agreement)15. Only one paired assessment per patient was included in the analysis. All data were analysed using SPSS version 23 software.
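As an illustration of the two measures described above, the following minimal Python sketch computes the proportion of observer agreement and Cohen’s kappa from the four cells of a two-by-two table and maps kappa onto the cut-off points listed in the text. It is not the SPSS procedure used in the study; the function names and the example counts are hypothetical and are not taken from the study data.

```python
import math
from typing import Optional


def observer_agreement(both_yes: int, nurse_only: int,
                       patient_only: int, both_no: int) -> float:
    """Proportion of paired ratings on which nurse and patient agree."""
    total = both_yes + nurse_only + patient_only + both_no
    return (both_yes + both_no) / total


def cohens_kappa(both_yes: int, nurse_only: int,
                 patient_only: int, both_no: int) -> Optional[float]:
    """Cohen's kappa for two raters and a yes/no item.

    Returns None when chance agreement equals 1 (for example, when
    neither rater ever answers 'yes'), because kappa is then undefined.
    """
    total = both_yes + nurse_only + patient_only + both_no
    p_observed = (both_yes + both_no) / total
    nurse_yes = (both_yes + nurse_only) / total
    patient_yes = (both_yes + patient_only) / total
    # Agreement expected by chance from each rater's marginal proportions.
    p_chance = nurse_yes * patient_yes + (1 - nurse_yes) * (1 - patient_yes)
    if math.isclose(p_chance, 1.0):
        return None
    return (p_observed - p_chance) / (1 - p_chance)


def landis_koch_label(kappa: float) -> str:
    """Map kappa onto the cut-off points quoted in the text."""
    if kappa < 0.0:
        return "poor"  # below chance; this band is not listed in the text
    if kappa <= 0.2:
        return "slight"
    if kappa <= 0.4:
        return "fair"
    if kappa <= 0.6:
        return "moderate"
    if kappa <= 0.8:
        return "substantial"
    return "almost perfect"


# Hypothetical counts for 73 nurse-patient pairs on a single item.
cells = dict(both_yes=10, nurse_only=4, patient_only=5, both_no=54)
agreement = observer_agreement(**cells)
kappa = cohens_kappa(**cells)
print(f"observer agreement: {agreement:.1%}")
print(f"kappa: {kappa:.2f} ({landis_koch_label(kappa)})")
```

Returning `None` when chance agreement equals 1 is a design choice of this sketch: it keeps items that no rater ever marked as present from producing a division by zero.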

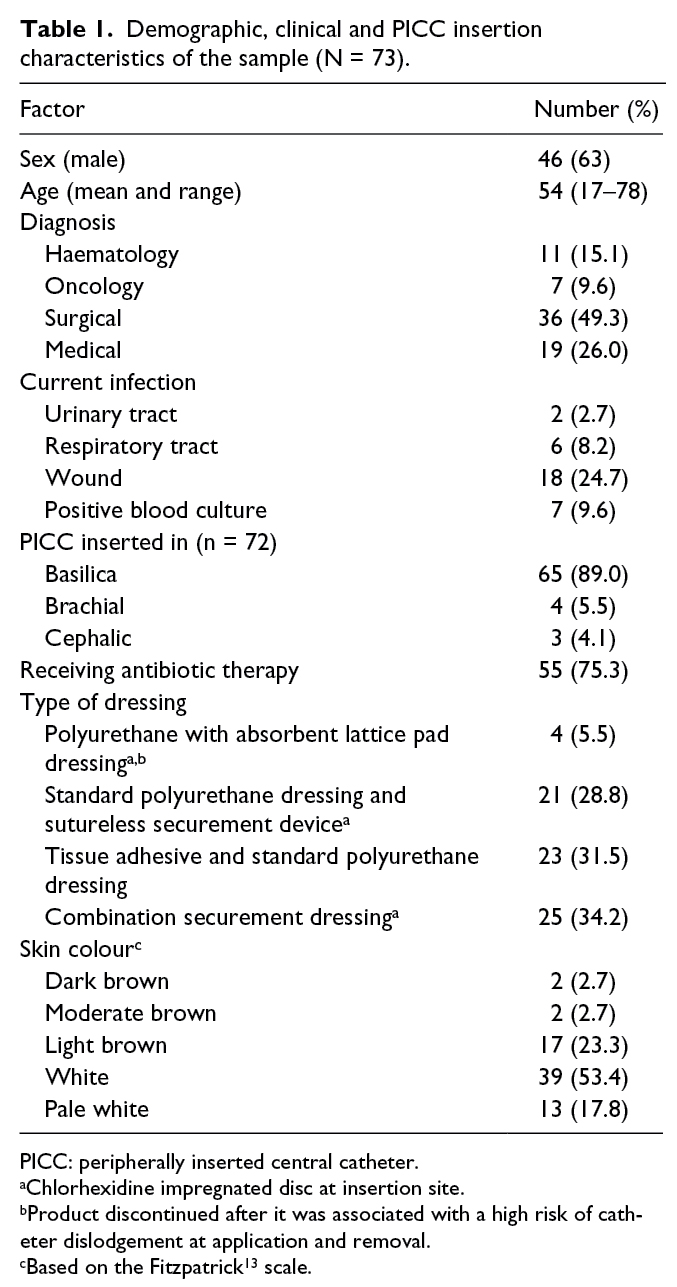

RESULTS

Of the original RCT cohort of 124 patients, 73 (58.9%) agreed to participate in the inter-observer agreement study. Reasons for not participating included being too unwell, being confused, having been discharged from hospital or transferred to another facility before testing could be undertaken or simply declining. The majority of participants were male (63%) and had been admitted for surgery (49%). A total of 33 (45%) patients had a pre-existing infection prior to line insertion, and 55 (75%) patients were receiving IV antibiotics. The vein accessed most frequently for PICC insertion was the basilic vein. Apart from the absorbent lattice pad dressing, which was withdrawn from the study due to a high risk of catheter dislodgement on application and removal, the spread of dressing types was similar among respondents. Table 1 shows details of demographic and insertion characteristics.

Inter-observer agreement

For nurse and patient assessments of the PICC access site, percentage agreement across items ranged from 83% to 100%. For the clinical signs (redness, swelling, ooze, pus and tracking), the level of agreement was higher (99%–100%). All kappa scores were in the categories ‘substantial agreement’ or ‘almost perfect agreement’. Details are shown in Table 2.

DISCUSSION

This is the first report of patients’ ability to assess the condition of their PICC insertion site, using a newly developed instrument, the PICC-SAT. Results are encouraging, with a high level of agreement between patient and nurse assessments of the site. The tested cohort was a heterogeneous group of acute hospital inpatients, representative of those who would generally be included in trials investigating issues associated with central venous access devices (CVADs). Additionally, the items on the PICC-SAT are the same as those required to assess the condition of the insertion site of a PIVC, so we believe that the instrument may also be useful for clinical monitoring and research studies that require follow-up of patients with PIVCs in situ after they are discharged from hospital.

Patient-reported outcome measures are becoming increasingly important in clinical trials16. Such data are usually collected by questionnaire, completed either by the patient or by an interviewer. When patients complete a questionnaire without face-to-face or other direct communication, the instrument needs to be easily understood, simple to complete and reliable. We have shown that the PICC-SAT meets these conditions and is suitable for use in clinical trial situations when the participant has left hospital. Moreover, involving patients in their own assessment may facilitate their engagement in RCTs, improve data retrieval and increase retention rates. These are important considerations when trying to meet clinical trial end points.

On average, 15% of PICCs7 and 25% of all CVADs fail prior to the completion of treatment17. Such a high failure rate could be associated with many factors, including the increasing reliance on patients and families to monitor and manage invasive devices at home, with little support or education. Our study found that the PICC-SAT is a reliable and easy-to-use assessment tool, indicating its application could be appropriate to support patients with CVADs receiving IV therapy in outpatient or hospital-in-the-home services. It provides patients with a clear reference guide to identify potential signs of infection and other complications and may facilitate early and timely interventions.

Differences in the agreement levels between the proportion of observer agreement and the kappa statistic shown in our study are not unusual, with similar differences being shown in other studies that have reported the two statistical methods18,19. The reason for the difference is that the kappa accounts for chance agreements, whereas the proportion of observer agreements fails to consider this possibility20. Confidence intervals for all kappa results were wide, indicating a level of uncertainty around the results. For example, the true kappa for the item ‘redness’ could be as low as .39 or as high as 1.0. The wide confidence intervals are a reflection of the relatively small sample size and a low incidence of positive endpoints.
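To illustrate why a low incidence of positive findings and a small sample produce wide confidence intervals around kappa, the following sketch applies a simple large-sample approximation for the standard error of kappa. The function name and the counts are hypothetical and are not the study data, and the approximation is only one of several available formulas, so the resulting interval should be read as indicative.

```python
import math
from typing import Tuple


def kappa_with_ci(both_yes: int, nurse_only: int, patient_only: int,
                  both_no: int, z: float = 1.96) -> Tuple[float, float, float]:
    """Return (kappa, lower, upper) using an approximate standard error.

    Assumes SE = sqrt(p_o * (1 - p_o) / (n * (1 - p_e) ** 2)), a simple
    large-sample approximation; bounds are clipped to the range -1 to 1.
    """
    n = both_yes + nurse_only + patient_only + both_no
    p_o = (both_yes + both_no) / n
    nurse_yes = (both_yes + nurse_only) / n
    patient_yes = (both_yes + patient_only) / n
    p_e = nurse_yes * patient_yes + (1 - nurse_yes) * (1 - patient_yes)
    if math.isclose(p_e, 1.0):
        raise ValueError("kappa is undefined when chance agreement is 1")
    kappa = (p_o - p_e) / (1 - p_e)
    se = math.sqrt(p_o * (1 - p_o) / (n * (1 - p_e) ** 2))
    lower = max(-1.0, kappa - z * se)
    upper = min(1.0, kappa + z * se)
    return kappa, lower, upper


# Hypothetical rare sign: only 3 positive ratings among 73 pairs.
small = kappa_with_ci(both_yes=2, nurse_only=1, patient_only=0, both_no=70)
# Same proportions in a sample ten times larger.
large = kappa_with_ci(both_yes=20, nurse_only=10, patient_only=0, both_no=700)

for label, (k, lo, hi) in (("n = 73", small), ("n = 730", large)):
    print(f"{label}: kappa = {k:.2f}, 95% CI {lo:.2f} to {hi:.2f}")
```

In this sketch both tables have the same percentage agreement and the same kappa, yet the interval for the smaller sample is much wider, which matches the explanation given in the text.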

STUDY LIMITATIONS

The study was conducted in one acute care hospital, so it would be useful to validate the results in other settings and with other populations, particularly outpatients. Although the study was relatively small, with only 73 participants, the proportion of agreement between nurses and patients was high. Confidence intervals around the kappa estimates were wide because there were few positive responses, so a larger study would be useful to improve certainty around all estimates. We were unable to calculate the kappa statistic for two items (presence of pus and tracking) because there were no reports of pus and only one report of tracking, which was made by a patient and not the nurse. Finally, pain/tenderness is the most frequently reported symptom of peripheral IV catheterisation10. If the instrument were to be used as a patient-reported outcome measure for PIVCs, it would be useful to include a pain scale.

CONCLUSION

We have demonstrated substantial agreement between expert vascular access nurses and patients for the clinical items on the PICC-SAT, making the instrument useful for patients in the home setting to assess for possible signs of localised infection or dressing failure associated with PICC insertion sites.

Declaration of conflicting interests

The author(s) declared no potential conflicts of interest with respect to the research, authorship and/or publication of this article.

Funding

This study was supported by the Royal Brisbane and Women’s Hospital Foundation.

Author(s)

*Joan Webster: Royal Brisbane and Women’s Hospital, Herston, QLD, Australia; Alliance for Vascular Access Teaching and Research (AVATAR), Menzies Health Institute Queensland, Griffith University, Nathan, QLD, Australia; Queensland University of Technology, Kelvin Grove, QLD, Australia. Postal address: Royal Brisbane and Women’s Hospital, Level 2, Building 34, Butterfield Street, Herston, QLD 4029, Australia. Email: joan.webster@health.qld.gov.au

Sarah Northfield: Royal Brisbane and Women’s Hospital, Herston, QLD, Australia; Queensland University of Technology, Kelvin Grove, QLD, Australia

Emily N Larsen: Royal Brisbane and Women’s Hospital, Herston, QLD, Australia; Alliance for Vascular Access Teaching and Research (AVATAR), Menzies Health Institute Queensland, Griffith University, Nathan, QLD, Australia

Nicole Marsh: Royal Brisbane and Women’s Hospital, Herston, QLD, Australia; Alliance for Vascular Access Teaching and Research (AVATAR), Menzies Health Institute Queensland, Griffith University, Nathan, QLD, Australia

Claire M Rickard: Royal Brisbane and Women’s Hospital, Herston, QLD, Australia; Alliance for Vascular Access Teaching and Research (AVATAR), Menzies Health Institute Queensland, Griffith University, Nathan, QLD, Australia

Raymond J Chan: Royal Brisbane and Women’s Hospital, Herston, QLD, Australia; Alliance for Vascular Access Teaching and Research (AVATAR), Menzies Health Institute Queensland, Griffith University, Nathan, QLD, Australia; Queensland University of Technology, Kelvin Grove, QLD, Australia

*Corresponding author

Pages 3-7

References

1. National Center for Health Statistics. Health, United States, 2015. Hyattsville, MD: National Center for Health Statistics, 2015.

2. MacIntyre CR, Ruth D & Ansari Z. Hospital in the home is cost saving for appropriately selected patients: a comparison with in-hospital care. Int J Qual Health Care 2002; 14(4):285–293.

3. Crilly J, Chaboyer W, Wallis M et al. An outcomes evaluation of an Australian hospital in the nursing home admission avoidance programme. J Clin Nurs 2011; 20(7–8):1178–1187.

4. Varney J, Weiland TJ & Jelinek G. Efficacy of hospital in the home services providing care for patients admitted from emergency departments: an integrative review. Int J Evid Based Healthc 2014; 12(2):128–141.

5. Hodgson KA, Huynh J, Ibrahim LF et al. The use, appropriateness and outcomes of outpatient parenteral antimicrobial therapy. Arch Dis Child 2016; 101(10):886–893.

6. Miron-Rubio M, Gonzalez-Ramallo V, Estrada-Cuxart O et al. Intravenous antimicrobial therapy in the hospital-at-home setting: data from the Spanish outpatient parenteral antimicrobial therapy registry. Future Microbiol 2016; 11(3):375–390.

7. Bertoglio S, Faccini B, Lalli L et al. Peripherally inserted central catheters (PICCs) in cancer patients under chemotherapy: a prospective study on the incidence of complications and overall failures. J Surg Oncol 2016; 113(6):708–714.

8. Durand AC, Gentile S, Gerbeaux P et al. Be careful with triage in emergency departments: interobserver agreement on 1,578 patients in France. BMC Emerg Med 2011; 11:19.

9. DeLaney MC, Page DB, Kunstadt EB et al. Inability of physicians and nurses to predict patient satisfaction in the emergency department. West J Emerg Med 2015; 16(7):1088–1093.

10. Marsh N, Mihala G, Ray-Barruel G et al. Inter-rater agreement on PIVC-associated phlebitis signs, symptoms and scales. J Eval Clin Pract 2015; 21(5):893–899.

11. Infusion Nurses Society. Infusion nursing standards of practice. J Infus Nurs 2011; 29(1 Suppl):S1–S92.

12. Ray-Barruel G, Polit DF, Murfield JE et al. Infusion phlebitis assessment measures: a systematic review. J Eval Clin Pract 2014; 20(2):191–202.

13. Fitzpatrick TB. The validity and practicality of sun-reactive skin types I through VI. Arch Dermatol 1988; 124(6):869–871.

14. De Vet HC, Mokkink LB, Terwee CB et al. Clinicians are right not to like Cohen’s κ. BMJ 2013; 346:f2125.

15. Landis R & Koch G. The measurement of observer agreement for categorical data. Biometrics 1977; 33(1):159–174.

16. Kyte D, Ives J, Draper H et al. Current practices in patient-reported outcome (PRO) data collection in clinical trials: a cross-sectional survey of UK trial staff and management. BMJ Open 2016; 6(10):e012281.

17. Ullman AJ, Marsh N, Mihala G et al. Complications of central venous access devices: a systematic review. Pediatrics 2015; 136(5):e1331–e1344.

18. Varmdal T, Ellekjaer H, Fjaertoft H et al. Inter-rater reliability of a national acute stroke register. BMC Res Notes 2015; 8:584.

19. Haviland JS, Hopwood P, Mills J et al. Do patient-reported outcome measures agree with clinical and photographic assessments of normal tissue effects after breast radiotherapy? The experience of the standardisation of breast radiotherapy (START) trials in early breast cancer. Clin Oncol 2016; 28(6):345–353.

20. McHugh ML. Interrater reliability: the kappa statistic. Biochem Med 2012; 22(3):276–282.